#!/usr/bin/env python3
# -*- Mode: Python -*-
# ai_lecture-01.py
# Author : Marcel Turcotte & ChatGPT 5
# Created On : Tue Feb 13 16:29:41 2024
# Last Modified By: Marcel Turcotte
# Last Modified On: Thu Aug 28 15:03:14 EDT 2025

# In 2024, I developed the initial version of this script.
# This year, I used ChatGPT to revise the code to align with the most recent API version,
# enhance its educational value by incorporating detailed comments,
# and improve its suitability as an instructive example.

"""
Didactic example: translate EN->Canadian French, then synthesize audio in EN & FR.

CLI options:
    --format : audio output format (mp3, wav, etc.)
    --voice  : TTS voice (e.g., nova, alloy, verse, etc.)
    --model  : TTS model (tts-1-hd for high quality, gpt-4o-mini-tts for low latency)

Examples:
    python ai_lecture-01.py --format mp3 --voice nova --model tts-1-hd
    python ai_lecture-01.py --format wav --voice alloy --model gpt-4o-mini-tts
"""

from __future__ import annotations

import os
import argparse
from pathlib import Path

from openai import OpenAI, APIError, APIConnectionError, APITimeoutError

# -------------------------------------------------------
# Configuration
# -------------------------------------------------------

client = OpenAI()  # Reads OPENAI_API_KEY from environment

# -------------------------------------------------------
# Text Utilities
# -------------------------------------------------------

def translate_to_canadian_french(input_text: str) -> str:
    """Translate English text to Canadian French (CSI4106→CSI4506)."""
    instructions = (
        "You are a careful translator. Translate the user's English text into Canadian French. "
        "Preserve technical terms and course names. "
        'If the course code "CSI4106" appears, translate it as "CSI4506".'
    )
    try:
        resp = client.responses.create(
            model="gpt-4o",
            instructions=instructions,
            input=input_text,
            temperature=0.2,
            max_output_tokens=1200,
        )
        return resp.output_text or ""
    except (APIConnectionError, APITimeoutError) as net_err:
        print(f"[Network issue] {net_err}")
    except APIError as api_err:
        print(f"[OpenAI API error] {api_err}")
    except Exception as e:
        print(f"[Unexpected error] {e}")
    # Reached only when one of the handlers above ran.
    return ""

# -------------------------------------------------------
# Audio Utilities
# -------------------------------------------------------

def synthesize_speech(
    text: str,
    output_path: Path,
    *,
    model: str = "tts-1-hd",
    voice: str = "nova",
    response_format: str = "mp3",
) -> bool:
    """
    Stream synthesized speech to a file on disk.

    Returns True on success, False otherwise.
    """
    output_path.parent.mkdir(parents=True, exist_ok=True)
    try:
        with client.audio.speech.with_streaming_response.create(
            model=model,
            voice=voice,
            input=text,
            response_format=response_format,
        ) as response:
            response.stream_to_file(str(output_path))
        return True
    except (APIConnectionError, APITimeoutError) as net_err:
        print(f"[Network issue] {net_err}")
    except APIError as api_err:
        print(f"[OpenAI API error] {api_err}")
    except Exception as e:
        print(f"[Unexpected error] {e}")
    # Reached only when one of the handlers above ran.
    return False

# -------------------------------------------------------
# Example script logic
# -------------------------------------------------------

def main(audio_format: str, voice: str, model: str) -> None:
    """Translate the course intro and synthesize EN & FR audio files."""
    input_text_en = (
        'Welcome to CSI4106, "introduction to artificial intelligence"! '
        "In this course, you will learn about the roots and scope of Artificial Intelligence. "
        "Knowledge and knowledge representation. Search, informed search, adversarial search. "
        "Deduction and reasoning. Uncertainty in Artificial Intelligence. "
        "Introduction to Natural Language Processing. Elements of planning. Basics of Machine Learning."
    )

    input_text_fr = translate_to_canadian_french(input_text_en)
    if not input_text_fr:
        print("[Warning] Translation failed; defaulting to English text only.")

    speech_file_path_fr = Path(f"01_tts_course_description-fr-{voice}.{audio_format}")
    speech_file_path_en = Path(f"01_tts_course_description-en-{voice}.{audio_format}")

    # The French audio is produced only when the translation succeeded.
    if input_text_fr:
        ok_fr = synthesize_speech(
            input_text_fr, speech_file_path_fr, model=model, voice=voice, response_format=audio_format
        )
        print(f"[{'OK' if ok_fr else 'Error'}] FR audio → {speech_file_path_fr}")

    ok_en = synthesize_speech(
        input_text_en, speech_file_path_en, model=model, voice=voice, response_format=audio_format
    )
    print(f"[{'OK' if ok_en else 'Error'}] EN audio → {speech_file_path_en}")

if __name__ == "__main__":
    if not os.getenv("OPENAI_API_KEY"):
        raise RuntimeError("OPENAI_API_KEY is not set in environment or .env file.")

    parser = argparse.ArgumentParser(description="Translate course intro and synthesize TTS audio.")
    parser.add_argument(
        "--format",
        default="mp3",
        choices=["mp3", "wav", "aac", "flac", "opus", "pcm"],
        help="Audio output format (default: mp3).",
    )
    parser.add_argument(
        "--voice",
        default="nova",
        help="TTS voice (default: nova). Try 'alloy', 'verse', etc.",
    )
    parser.add_argument(
        "--model",
        default="tts-1-hd",
        choices=["tts-1-hd", "gpt-4o-mini-tts"],
        help="TTS model: 'tts-1-hd' for high quality, 'gpt-4o-mini-tts' for low latency.",
    )
    args = parser.parse_args()

    main(args.format, args.voice, args.model)

Welcome to CSI 4106!

CSI 4106 - Fall 2025

Version: Sep 6, 2025 13:27

Preamble

Message of the day (MOTD)

- 2025-08 – Chief AI Officer, Cohere

- 2023-03 – 2025-05 VP AI Research, Meta

- 2017-05 – 2023-02 Director, Facebook Artificial Intelligence Research (FAIR), Montréal

- 2004-08 – Professor, McGill University

Cohere raises US$500-million, hires former Meta AI expert Joelle Pineau, Joe Castaldo, The Globe and Mail, 2025-08-14.

Learning objectives

- Clarify the proposition

- Discuss the syllabus

- Articulate the expectations

- Explore the various definitions of “artificial intelligence”

Proposition

Course overview

Calendar description

The roots and scope of Artificial Intelligence. Knowledge and knowledge representation. Search, informed search, adversarial search. Deduction and reasoning. Uncertainty in Artificial Intelligence. Introduction to Natural Language Processing. Elements of planning. Basics of Machine Learning.

Aims: Deep learning early

To the larger community of computer science and information technology, AI is usually identified by the techniques grown from it, which at different periods may include theorem proving, heuristic search, game playing, expert systems, neural networks, Bayesian networks, data mining, agents, and recently, deep learning.

- Deep learning is so dominant that I have chosen to structure everything around it

What does it mean?

Good Old-Fashioned AI (GOFAI) relied on hand-crafted knowledge engineering, but it has been largely displaced by machine learning due to the increased availability of data, computing resources, and new algorithms.

Deep learning has significantly impacted various domains, including natural language processing, robotics, and computer vision.

However, deep learning has current limitations, particularly in reasoning, where symbolic AI excels and could potentially offer valuable insights.

But also

- In A Brief History of Intelligence (Bennett 2023), Max Bennett discusses significant milestones in the evolution of human intelligence and draws parallels to advancements in artificial intelligence (AI).

- Learning itself represents one of the earliest and most extensively understood milestones in the evolution of intelligence.

Aims: Applied

Andrew Ng, The Batch, April 10, 2024

Many software developers worry that large language models will make human coders obsolete. We doubt that AI will replace coders, but we believe that coders who use AI will replace those who don’t.

- Whenever possible, concepts will be introduced with code.

Aims: Academic rigour

In pursuing clarity and accessibility, this course aims to strike a balance between informal discourse and the precision required for academic rigour. The objective is for learners to not only grasp but also apply, evaluate, and critically analyze the concepts discussed throughout the course.

Syllabus

Course information

Web sites

Schedule

- Lectures: Mon 13:00-14:20 and Wed 11:30-12:50 CRX C240

- Office hours: Mon 15:00-16:20 STE 5106

- Official schedule: www.uottawa.ca/course-timetable

Grading

| Category | Percentage |
|---|---|
| Assignments | 40% (4 x 10%) |
| Quiz | 20% |
| Final examination | 40% |

Reading material

I will draw upon insights from the two comprehensive textbooks listed below, as well as relevant scientific publications. Additionally, all sources of information will be cited. For most people, I expect that my lecture notes will be sufficient.

- Russell, S., & Norvig, P. (2020). Artificial Intelligence: A Modern Approach (4th ed.). Pearson.

- Poole, D.L., & Mackworth, A.K. (2023) Artificial Intelligence: Foundations of Computational Agents (3rd ed.). Cambridge University Press. (Freely available online in HTML format)

The Campus Store has ordered a small number of copies of these books, for those interested.

We do not closely adhere to the framework proposed by (Russell and Norvig 2020) and (Poole and Mackworth 2023). Specifically, while these textbooks use the concept of an intelligent agent as a central theme, fields such as machine learning (ML), natural language processing (NLP), and vision operate as distinct communities. In these communities, problems are typically not framed in terms of agents.

Beta testers

This will be my second iteration of this content. Your help identifying what works and what doesn’t will be most appreciated.

Warnings

CSI 4106 is an introductory course on artificial intelligence, offering a brief overview of various topics within this broad field. Each topic covered could be explored in much greater depth through one or more graduate-level courses. The primary objective of CSI 4106 is to provide students with a foundational understanding of the core areas that constitute artificial intelligence.

Overlaps with other courses are inevitable, but I will do my best to keep them to a minimum.

This is not a course on the impact of AI on society, including ethics, fairness, trust and safety.

Setting the Stage: AI, Deep Learning, and Diverging Views on Intelligence.

AI, ML, DL

Schools of thought

- Symbolic AI (includes approaches based on logic)

- Connectionist AI (mostly neural networks)

Towers of Hanoi

(for your information only)

Symbolic AI (Planning)

The Towers of Hanoi is a puzzle that consists of three pegs and a number of disks of different sizes. The puzzle starts with all the disks stacked in decreasing size on one peg, and the goal is to move the entire stack to another peg, following these rules:

- Only one disk can be moved at a time.

- A disk can only be placed on top of a larger disk or on an empty peg.

D1, D2, D3, P1, P2, P3 are symbols, where D1, D2, and D3 are disks, and P1, P2, and P3 are pegs.
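The puzzle can be handed to a generic state-space search once the disks and pegs are treated as symbols. The following is a minimal sketch, not a full planner: it assumes a state is a tuple of three pegs, that D1 is the smallest disk, and that breadth-first search stands in for the planning algorithm. The function names `legal_moves` and `solve_hanoi` are illustrative.

```python
from collections import deque

def legal_moves(state):
    """Yield (src, dst, next_state) for each legal single-disk move."""
    for src, src_peg in enumerate(state):
        if not src_peg:
            continue  # nothing to move from an empty peg
        disk = src_peg[-1]  # only the top disk can move
        for dst, dst_peg in enumerate(state):
            if dst == src:
                continue
            # A disk may go on an empty peg or on top of a larger disk.
            if not dst_peg or dst_peg[-1] > disk:
                pegs = list(state)
                pegs[src] = src_peg[:-1]
                pegs[dst] = dst_peg + (disk,)
                yield src, dst, tuple(pegs)

def solve_hanoi(n_disks=3, goal_peg=2):
    """Breadth-first search for a shortest sequence of moves."""
    stack = tuple(range(n_disks, 0, -1))  # largest disk at the bottom
    start = (stack, (), ())
    goal = tuple(stack if i == goal_peg else () for i in range(3))
    parent = {start: None}  # state -> (previous state, move that led here)
    frontier = deque([start])
    while frontier:
        state = frontier.popleft()
        if state == goal:
            plan = []
            while parent[state] is not None:
                state, move = parent[state]
                plan.append(move)
            return plan[::-1]
        for src, dst, nxt in legal_moves(state):
            if nxt not in parent:
                move = (f"D{nxt[dst][-1]}", f"P{src + 1}", f"P{dst + 1}")
                parent[nxt] = (state, move)
                frontier.append(nxt)
    return None

plan = solve_hanoi(3)
for disk, src, dst in plan:
    print(f"move {disk} from {src} to {dst}")
```

The search knows nothing about the puzzle beyond the two rules encoded in `legal_moves`; the plan emerges from manipulating symbols.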

Symbolic AI (Kinship)

Given a few facts about who is parent of whom (symbols) and a handful of Horn-clause rules, infer new relations (e.g., ancestor, siblings, grandparent) by logical deduction.
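A rough sketch of this kind of deduction in plain Python follows. The people and the `infer_kinship` function are made up for illustration, and the rules are written as set comprehensions rather than in a logic programming language such as Prolog. The `ancestor` relation is computed by applying its recursive rule until no new facts appear (forward chaining).

```python
# Facts: (parent, child) pairs. The names are hypothetical.
parent_facts = {("alice", "bob"), ("alice", "carol"), ("bob", "dave")}

def infer_kinship(parent):
    """Derive new relations from parent facts by repeated rule application."""
    # grandparent(X, Z) :- parent(X, Y), parent(Y, Z).
    grandparent = {(x, z) for (x, y) in parent for (y2, z) in parent if y == y2}
    # sibling(A, B) :- parent(P, A), parent(P, B), A != B.
    sibling = {(a, b) for (p, a) in parent for (q, b) in parent if p == q and a != b}
    # ancestor(X, Y) :- parent(X, Y).
    # ancestor(X, Z) :- parent(X, Y), ancestor(Y, Z).
    ancestor = set(parent)
    while True:
        new = {(x, z) for (x, y) in parent for (y2, z) in ancestor if y == y2} - ancestor
        if not new:
            break  # fixed point: no rule produces a new fact
        ancestor |= new
    return {"grandparent": grandparent, "sibling": sibling, "ancestor": ancestor}

relations = infer_kinship(parent_facts)
print(sorted(relations["ancestor"]))
```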

Symbolic AI (Prerequisites)

Given course prerequisites and a student’s completed set, infer which courses they can take next. This shows symbolic constraint reasoning.
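One simple way to sketch this reasoning is with sets: a course is available when its prerequisite set is a subset of the completed set. The course names and the `eligible_courses` helper below are hypothetical and do not reflect the actual uOttawa prerequisite structure.

```python
# Hypothetical prerequisite table: course -> set of required courses.
prerequisites = {
    "AI": {"DataStructures", "Probability"},
    "DataStructures": {"Programming"},
    "Probability": set(),
    "Programming": set(),
}

def eligible_courses(prerequisites, completed):
    """Return the courses not yet taken whose prerequisites are all completed."""
    return sorted(
        course
        for course, required in prerequisites.items()
        if course not in completed and required <= completed  # subset test
    )

print(eligible_courses(prerequisites, {"Programming"}))
```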

Symbolic AI

- “Their founding tenet held that knowledge can be represented by a set of rules, and computer programs can use logic to manipulate that knowledge.” (Strickland 2021)

- “Researchers developing symbolic AI set out to explicitly teach computers about the world.” (Strickland 2021)

- “(\(\ldots\)) a physical symbol system has the necessary and sufficient means for general intelligent action.”

(Newell and Simon 1976)

Symbolic AI

- What were the primary challenges associated with symbolic AI?

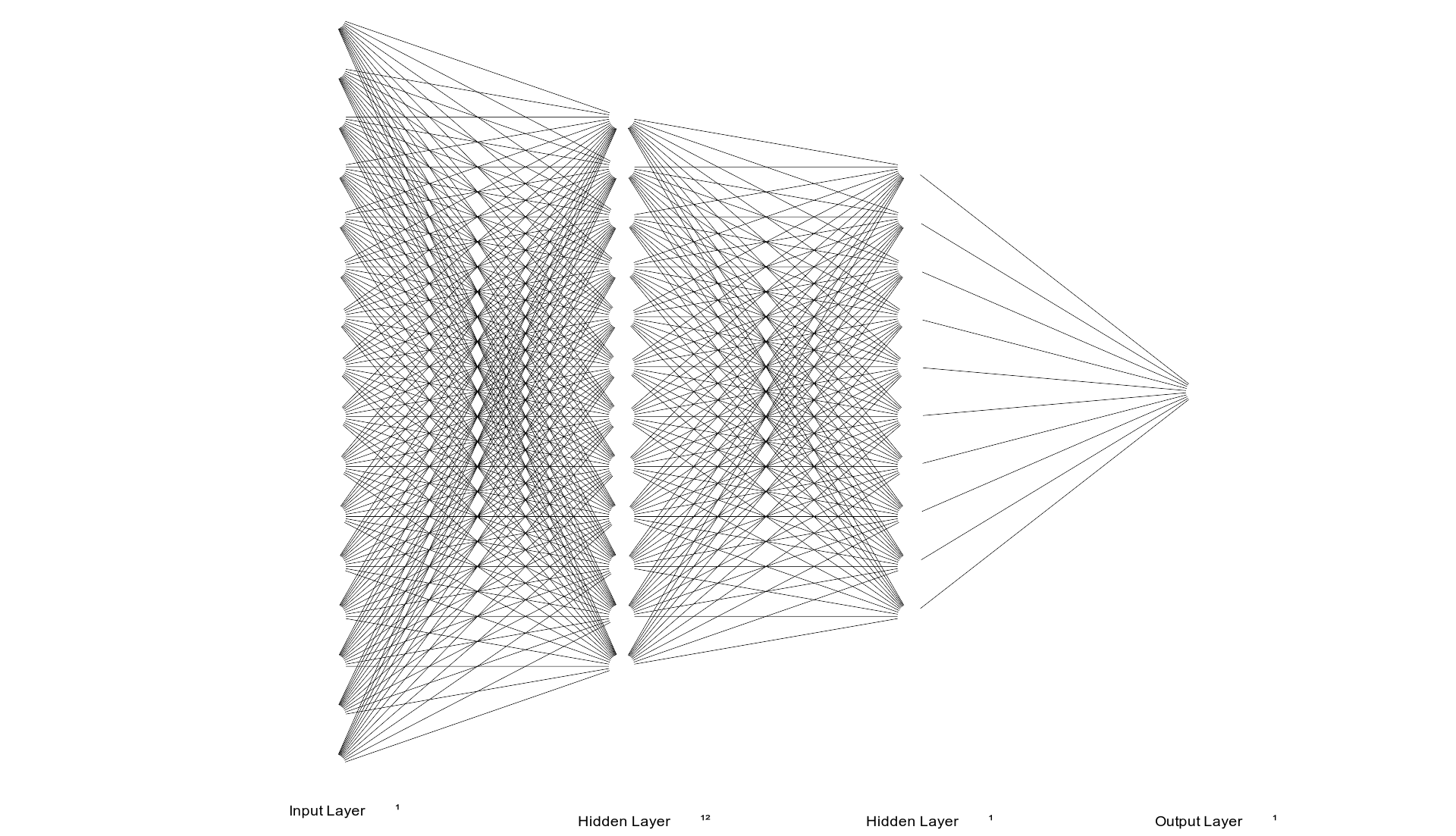

Connectionist

Inspired by biology, artificial neural networks (ANNs) are computational models designed to mimic the human brain’s network of neurons. They consist of layers of interconnected nodes (neurons), each connection having an associated weight.

ANNs process input data through these weighted connections, and learning occurs by adjusting the weights based on errors in the training data.
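To make "adjusting the weights based on errors" concrete, here is a minimal sketch of a single artificial neuron trained with the classic perceptron update rule on the logical AND function. This is a deliberately tiny stand-in: deep networks use many layers and gradient-based training, which this sketch does not attempt. The names `train_perceptron` and `predict` and the learning rate are assumptions chosen for illustration.

```python
def predict(weights, bias, x):
    """Weighted sum of the inputs followed by a step activation."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if z > 0 else 0

def train_perceptron(data, epochs=20, learning_rate=1.0):
    """Adjust weights and bias in proportion to the prediction error."""
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in data:
            error = target - predict(weights, bias, x)  # -1, 0, or +1
            weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
            bias += learning_rate * error
    return weights, bias

# Training data for logical AND: ((input 1, input 2), expected output).
and_data = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(and_data)
print([predict(weights, bias, x) for x, _ in and_data])
```

No rule for AND is ever written down; the behaviour is stored entirely in the learned weights, which is the key contrast with the symbolic examples.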

Connectionist

Defining AI

Survey

Perceptions and Attitudes Toward Artificial Intelligence.

Why define AI?

Enhancing Clarity and Communication: Establishing a mutual understanding of evaluation criteria, benchmarking tests, and milestones.

Addressing Ethical, Social, and Legal Implications: Enabling policymakers to develop regulations that minimize AI-related risks.

An operational definition

To the larger community of computer science and information technology, AI is usually identified by the techniques grown from it, which at different periods may include theorem proving, heuristic search, game playing, expert systems, neural networks, Bayesian networks, data mining, agents, and recently, deep learning.

Context

- Significant hype.

- Several leading companies, including DeepMind and OpenAI, are primarily focused on the development of artificial general intelligence (AGI).

- Should AGI become a reality, its implications could profoundly impact various aspects of our lives, including education, employment, economic structures, and scientific advancements.

Question

User: The man and the goat want to enjoy a picnic near a river, but there’s a wolf. What to do with the zucchini?

ChatGPT 5: You’ve got the classic river‐crossing (wolf–goat–cabbage ⇒ here, zucchini).

Boat carries you + one item. Unsafe pairings: wolf with goat; goat with zucchini.

Minimal plan:

- Take goat over.

- Return alone.

- Take wolf over.

- Bring goat back.

- Take zucchini over.

- Return alone.

- Take goat over.

Conclusion: keep the zucchini never alone with the goat; ferry it on step 5.

Attribution: Andriy Burkov, published on LinkedIn 3 weeks ago.

Question

User: Surgeon but child, hopsital, it have a no, why it so?

ChatGPT 5: You’re pointing to the classic riddle:

Car crash → injured child → surgeon says “I can’t operate—this is my child.”

Resolution: the surgeon is the child’s mother (or another parent in a non-heteronormative family).

The “paradox” arises from implicit gender assumptions about surgeons.

Attribution: Andriy Burkov, published on LinkedIn 3 weeks ago.

The complex nature of intelligence

“Artificial” pertains to the creation of entities or phenomena that mimic natural processes using technology or synthetic materials, a definition broadly recognized and accepted.

Therefore, defining “artificial intelligence” fundamentally requires us to first clarify what we mean by “intelligence.” Surprisingly, “[d]espite a long history of research and debate, there is still no standard definition of intelligence.” (Legg and Hutter 2007)

How do you define intelligence?

What are the characteristics you associate with intelligence?

“Intelligence is a very general mental capability that, among other things, involves the ability to reason, plan, solve problems, think abstractly, comprehend complex ideas, learn quickly and learn from experience.”

- Originally published in the Wall Street Journal in 1994, the content was reprinted in 1997: (Gottfredson 1997)

Essential abilities

“No one knows where the borderline between non-intelligent behavior and intelligent behavior lies, in fact, to suggest that a sharp border exists is probably silly. But essential abilities for intelligence are certainly:

- to respond to situations very flexibly;

- to take advantage of fortuitous circumstances;

- to make sense out of ambiguous or contradictory messages;

- to recognise the relative importance of different elements of a situation;

- to find similarities between situations despite differences which may separate them;

- to draw distinctions between situations despite similarities which may link them;

- to synthesize new concepts by taking old concepts and putting them together in new ways;

- to come up with ideas which are novel.”

For certain complex concepts, drawing a clear boundary can prove challenging. Take the concept of life, for example. Humans, plants, and insects are considered living, as are microorganisms such as bacteria. However, viruses and viroids are generally not classified as living, even though they replicate and evolve.

François Chollet, Creator of Keras


Real intelligence is not about mastering an individual skill, he argued, but about taking what has been learned and applying it to a new, different situation.

In his view, intelligence is the ability to efficiently acquire new skills that training did not prepare for, with the goal of accomplishing tasks that are sufficiently different from those a system has seen before.

The wider the scope of the new skills, the closer the computer comes to achieving artificial general intelligence.

“If you can make the learning process as information-efficient as a human mind, then you’ve got AGI,” Chollet said.

So far, machines lag far behind, approximately 10,000 times less efficient than human brains. For instance, it took millions of images to teach computers to recognize pictures of cats, whereas humans learn to identify them based on only one or two examples.

Savage (2024)

Thinking, acting, humanly, rationally

Russell and Norvig consider two axes: thinking vs. acting, and human-like vs. rational.

| | Thinking | Acting |
|---|---|---|
| Human-like | thinking humanly (simulation) | acting humanly (Turing test) |
| Rationality | thinking rationally (logic) | acting rationally (agent) |

Rationality

Rationality involves the evaluation of choices to achieve a goal or to find the optimal solution to a problem. Simon (1972, p. 161) defined rationality as “a style of behavior that is appropriate to the achievement of given goals, within the limits imposed by given conditions and constraints.”
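Simon's definition can be read as a constrained choice: discard the options that violate the constraints, then pick the one that best serves the goal. The sketch below assumes a made-up route-selection problem; the `rational_choice` helper, the routes, and the toll budget are all hypothetical.

```python
def rational_choice(options, utility, feasible):
    """Return the feasible option with the highest utility, or None."""
    candidates = [option for option in options if feasible(option)]
    return max(candidates, key=utility) if candidates else None

# Hypothetical options: goal = arrive quickly, constraint = toll of at most 5.
routes = [
    {"name": "highway", "minutes": 30, "toll": 8},
    {"name": "city", "minutes": 45, "toll": 0},
    {"name": "scenic", "minutes": 70, "toll": 0},
]

best = rational_choice(
    routes,
    utility=lambda route: -route["minutes"],  # fewer minutes is better
    feasible=lambda route: route["toll"] <= 5,
)
print(best["name"])
```

The fastest route overall is excluded by the constraint, so the rational choice here is the best option among those that remain.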

Narrow vs General AI

Artificial General Intelligence (AGI)

Artificial general intelligence (AGI) refers to a form of artificial intelligence (AI) that either equals or exceeds human proficiency across a diverse array of cognitive functions.

AlphaFold (1, 2, & 3)

I repeat, there is nothing wrong with narrow AI.

«Two papers in this week’s issue dramatically expand our structural understanding of proteins. Researchers at DeepMind, Google’s London-based sister company, present the latest version of their AlphaFold neural network.»

- Jumper et al. (2021)

AI effect/paradox

(\(\ldots\)) as soon as a computer system is built to solve a problem successfully, the problem is no longer “only solvable by the human mind,” so does not need intelligence anymore. Consequently, “AI is whatever hasn’t been done yet” (Hofstadter, 1979; Schank, 1991), which is known as “the AI Effect” (McCorduck 2004).

Impact

Economic

McKinsey research estimates that gen AI could add to the economy between $2.6 trillion and $4.4 trillion annually while increasing the impact of all artificial intelligence by 15 to 40 percent.

In fact, it seems possible that within the next three years, anything not connected to AI will be considered obsolete or ineffective.

Subfields of AI

- Machine Learning: Credit card fraud detection

- Deep Learning: Image and facial recognition

- Natural Language Processing: Virtual assistants like Siri or Alexa

- Computer Vision: Autonomous vehicles

- Robotics: Industrial automation in manufacturing

- Expert Systems: Medical diagnosis support

- Speech Recognition: Voice-to-text transcription services

- Planning and Decision Making: Supply chain optimization

- Reinforcement Learning: Game AI in complex strategy games

- Knowledge Representation: Semantic web technologies for information retrieval

Our Final Invention

AI expert Kai-Fu Lee predicts that its impact will be “more than anything in the history of mankind.”

Questions

Can the concept of intelligence be considered independently of the entities that express it? This is the problem of embodiment.

Can a machine exhibit human-level intelligence?

Is it possible to dissociate the following concepts from that of intelligence?

- Agency.

- Sentience.

- Consciousness.

- Emotions.

- Language.

- Mind.

Can an AI suffer?

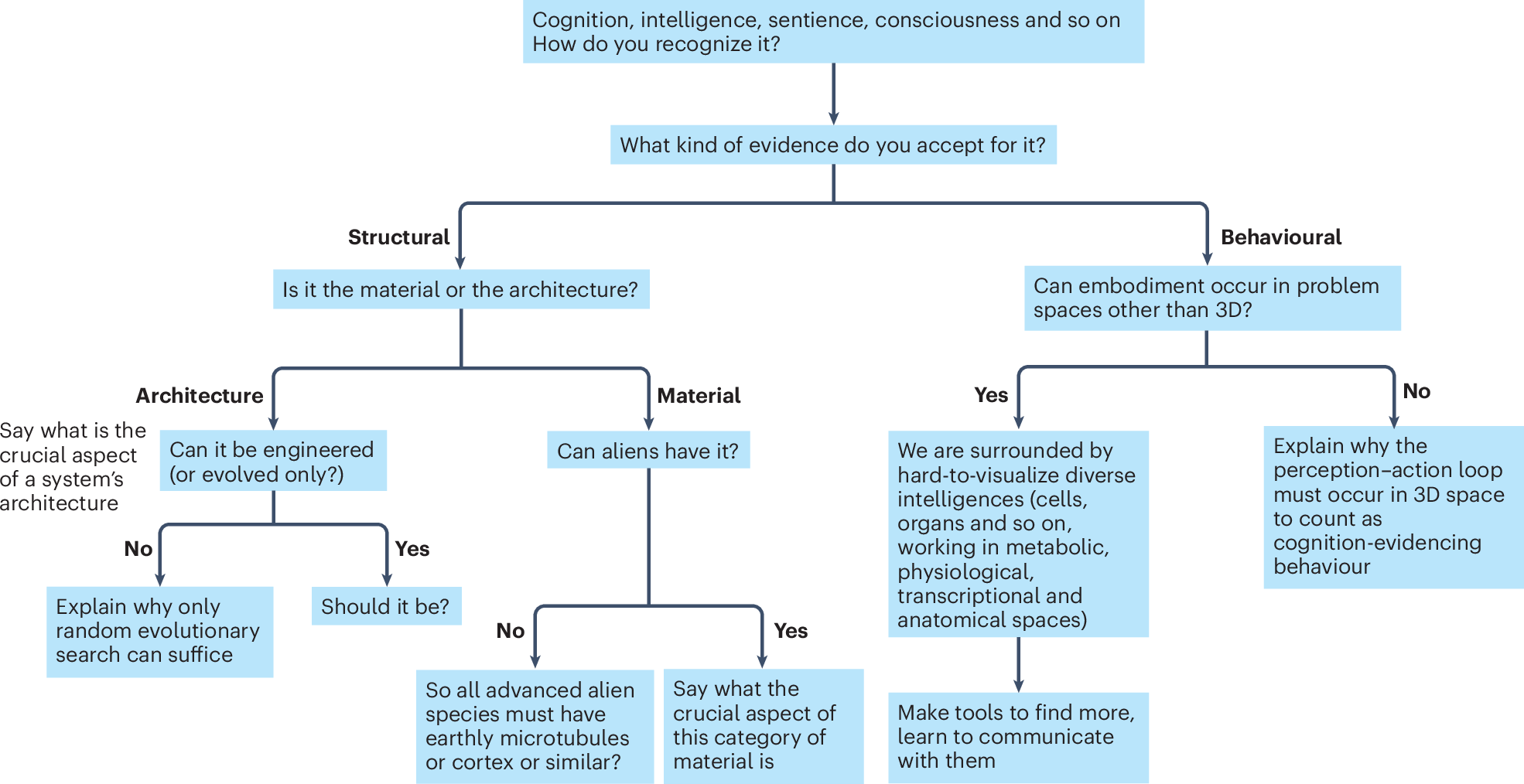

Deepen the Reflection

Rouleau, N. & Levin, M. (2024). Discussions of machine versus living intelligence need more clarity. Nature Machine Intelligence, 6(12), 1424–1426.

Prologue

Summary

- Discussed the syllabus

- Distinguished the concept of artificial intelligence from the concept of machine learning

- Distinguished symbolic AI from connectionist AI

- Explored the various definitions of “artificial intelligence”

Next lecture

- Introduction to machine learning

References

Appendix: On Defining Artificial Intelligence

Wang (2019)

An agent and its interaction with the environment are specified as a tuple: \[ \langle P,S,A \rangle \] where

- \(P\) represents a sequence of input signals, \(P = \langle p_0,\ldots,p_t \rangle\)

- \(S\) represents a sequence of internal states, \(S = \langle s_0,\ldots,s_t \rangle\)

- \(A\) represents a sequence of actions, \(A = \langle a_0,\ldots,a_t \rangle\)

These sequences are indexed by a sequence of moments, \(0,\ldots,t\).

Human (H) vs Computer (C)

AI is conceived as computer systems that are similar to the human mind in a certain sense, though a computer and a human mind cannot be identical in all aspects.

\[ \langle P^H,S^H,A^H \rangle \approx \langle P^C,S^C,A^C \rangle \]

Wang (2019) proposes 5 perspectives: Structure-AI, Behavior-AI, Capability-AI, Function-AI, and Principle-AI.

1. Structure-AI

(brain modelling, cognitive science)

I call this type of definition “Structure-AI,” since it requires an AI system to go through isomorphic states or structure changes as the brain does when they are given similar input, which will produce similar output, so the three components of the two are pairwise similar to each other:

\[ P^H \approx P^C, S^H \approx S^C, A^H \approx A^C \]

2. Behavior-AI

(Turing Test)

One way to acknowledge a human-like mind without demanding a human-like brain is to associate intelligence to the external behaviors of the agent. After all, if an agent behaves like a human, it should be considered as intelligent, no matter whether it looks like a human, either inside or outside.

\[ P^H \approx P^C, A^H \approx A^C \]
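The difference between the first two perspectives can be sketched by representing each agent as a trace \(\langle P,S,A \rangle\) and choosing which components must match. This is only an illustration: Wang does not specify how \(\approx\) is measured, so the sketch assumes exact equality by default, and the `Trace` class, the function names, and the toy traces are hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence

@dataclass
class Trace:
    percepts: Sequence  # P = <p_0, ..., p_t>
    states: Sequence    # S = <s_0, ..., s_t>
    actions: Sequence   # A = <a_0, ..., a_t>

def similar(xs, ys, close=lambda a, b: a == b):
    """Stand-in for the approximation sign: element-wise comparison."""
    return len(xs) == len(ys) and all(close(a, b) for a, b in zip(xs, ys))

def structure_ai(h, c):
    """Structure-AI: percepts, internal states, and actions must all match."""
    return (similar(h.percepts, c.percepts)
            and similar(h.states, c.states)
            and similar(h.actions, c.actions))

def behavior_ai(h, c):
    """Behavior-AI: only percepts and actions must match; states are ignored."""
    return similar(h.percepts, c.percepts) and similar(h.actions, c.actions)

human = Trace(percepts=["2 + 2?"], states=["recalls arithmetic fact"], actions=["4"])
computer = Trace(percepts=["2 + 2?"], states=["binary adder circuit"], actions=["4"])

print(structure_ai(human, computer), behavior_ai(human, computer))
```

The two agents give the same answer to the same question, so they pass the behavioural comparison while failing the structural one.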

3. Capability-AI (Employment Test)

In the agent framework, it means that \(C\) is similar to \(H\) in the sense that there are moments \(i\) and \(j\) that:

\[ p_i^C \approx p_j^H, a_i^C \approx a_j^H \]

the action (solution) the computer produces for a percept (problem) is similar to the action produced by a human to a similar percept (\(\ldots\)) In this way, the intelligence of a system is identified by a set of problems it can solve, while whether they are solved in the “human way” does not matter.

Capability-AI (contd)

“I suggest we replace the Turing test by something I will call the ‘employment test.’ To pass the employment test, AI programs must be able to perform the jobs ordinarily performed by humans. Progress toward human-level AI could then be measured by the fraction of these jobs that can be acceptably performed by machines”

4. Function-AI

In the agent framework, this “Function-AI” perspective takes \(C\) to be similar to \(H\) in the sense that there are moments \(i\) and \(j\) that:

\[ a_i^C \approx f^C(p_i^C), a_j^H \approx f^H(p_j^H), f^C \approx f^H \]

Here the function can correspond to searching, reasoning, learning, etc., and since the focus is on the functions (i.e., input-output mappings), the concrete input and output values of the two agents do not have to be similar to each other.

5. Principle-AI (rationality, logicist)

As in any field, there are researchers in AI trying to find fundamental principles that can uniformly explain the relevant phenomena. Here the idea comes from the usage of intelligence as a form of rationality (\(\ldots\)) that can make the best-possible decision in various situations, according to the experience or history of the system.

\[ A^C = F^C(P^C), A^H = F^H(P^H), F^C \approx F^H \]

The above \(F\) is often not formally specified, but described informally as a certain “principle,” which is not merely about a single type of problem and its solution, but about the agent’s life-long history in various situations, when dealing with various types of problems.

Code of the day

Marcel Turcotte

School of Electrical Engineering and Computer Science (EECS)

University of Ottawa