Softmax, cross-entropy, regularization

CSI 4106 - Fall 2025

Version: Oct 28, 2025 17:11

Preamble

Message of the Day

Learning objectives

- Explain the role of softmax and cross-entropy loss in measuring the dissimilarity between predicted and true probability distributions.

- Explore methods such as \(\ell_1\) and \(\ell_2\) regularization and dropout that improve the generalization of neural networks.

Output Layers

Output Layer: Regression Task

- # of output neurons:

- 1 per dimension

- Output layer activation function:

- None in general; ReLU/softplus if the output must be positive; sigmoid/tanh if the output is bounded.

- Loss function:

- Mean squared error (MSE)

Output Layer: Multi-Label

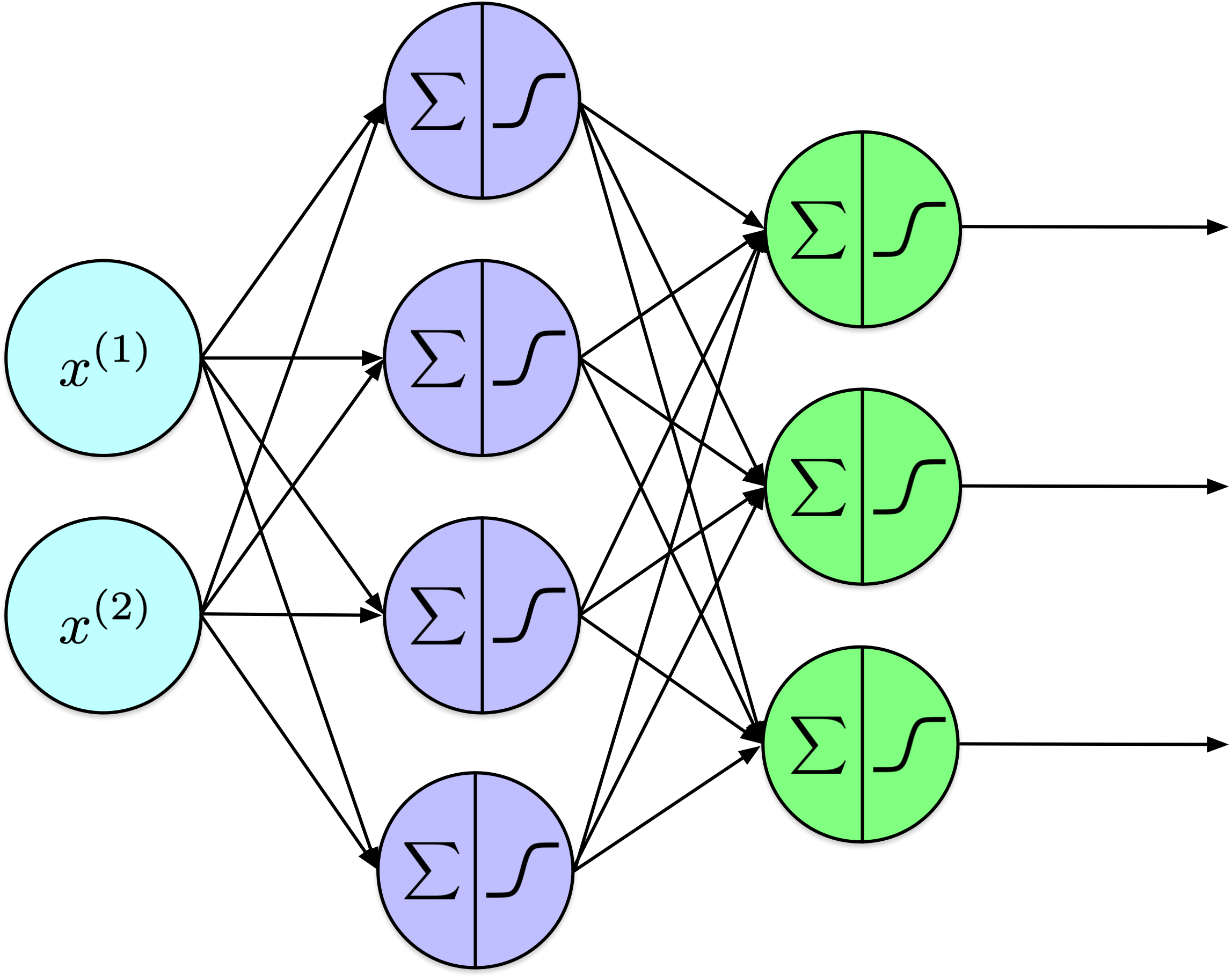

Output Layer: Classification Task

- # of output neurons:

- 1 if binary; 1 per class if multi-label or multi-class.

- Output layer activation function:

- sigmoid if binary or multi-label; softmax if multi-class.

- Loss function:

- cross-entropy

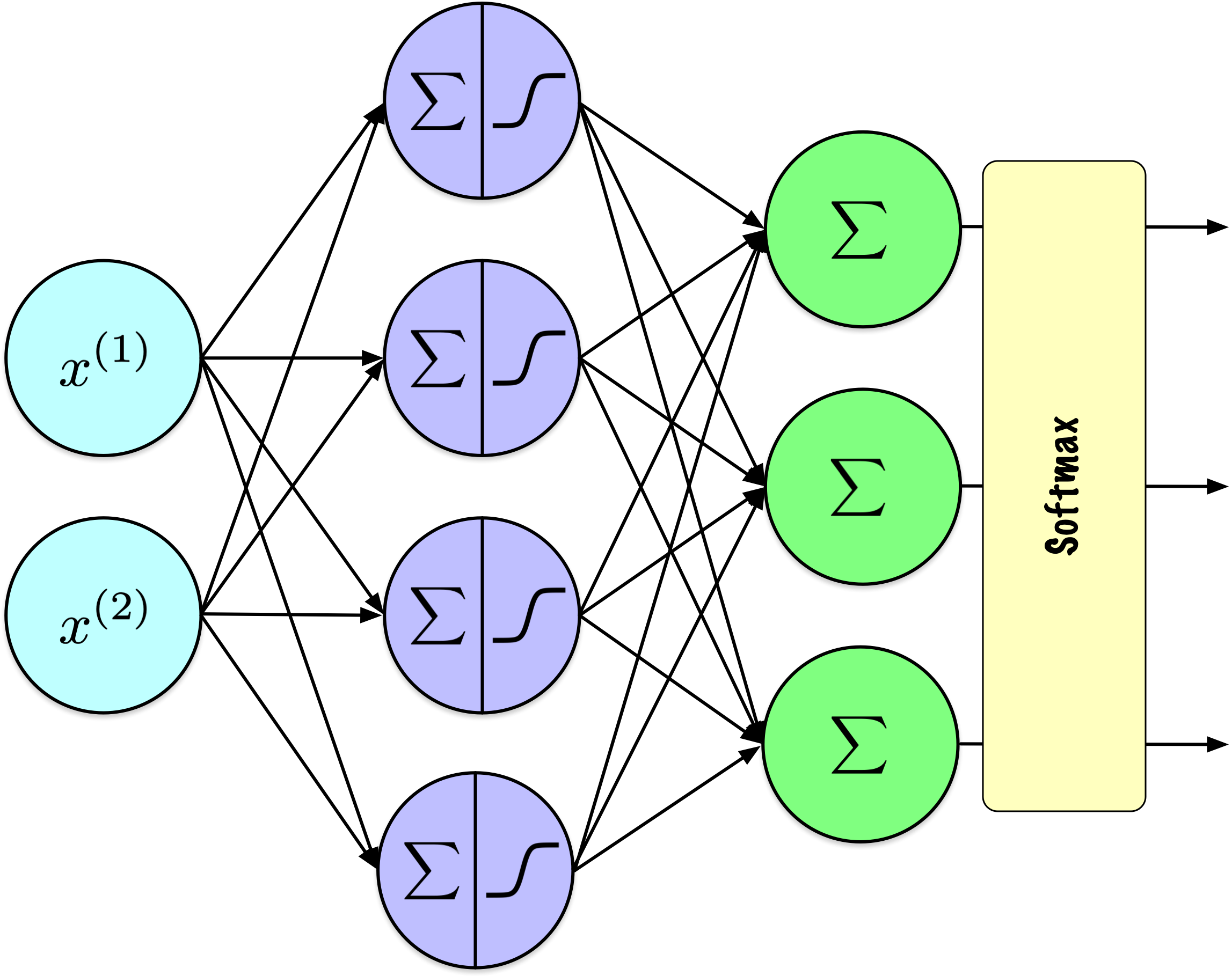

Softmax

Softmax

The softmax function is an activation function used in multi-class classification problems to convert a vector of raw scores into probabilities that sum to 1.

Given a vector \(\mathbf{z} = [z_1, z_2, \ldots, z_n]\):

\[ \sigma(\mathbf{z})_i = \frac{e^{z_i}}{\sum_{j=1}^{n} e^{z_j}} \]

where \(\sigma(\mathbf{z})_i\) is the probability of the \(i\)-th class, and \(n\) is the number of classes.
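The definition above can be sketched in a few lines of plain Python. This is a minimal illustration rather than a library implementation; the function name `softmax` and the max-subtraction step are choices made here. Subtracting the largest score before exponentiating does not change the result, because the common factor cancels in the ratio, but it avoids overflow for large scores.

```python
import math

def softmax(z):
    """Return softmax probabilities for a list of raw scores."""
    # Subtracting the maximum score leaves the result unchanged
    # but keeps exp() from overflowing on large inputs.
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax([5.0, 6.0, 4.0])
print([round(p, 2) for p in probs])  # [0.24, 0.67, 0.09]
```

The largest score receives the largest probability, and the outputs sum to 1.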

Softmax

| \(z_1\) | \(z_2\) | \(z_3\) | \(\sigma(\mathbf{z})_1\) | \(\sigma(\mathbf{z})_2\) | \(\sigma(\mathbf{z})_3\) | \(\sum\) |
|---|---|---|---|---|---|---|
| 1.47 | -0.39 | 0.22 | 0.69 | 0.11 | 0.20 | 1.00 |
| 5.00 | 6.00 | 4.00 | 0.24 | 0.67 | 0.09 | 1.00 |
| 0.90 | 0.80 | 1.10 | 0.32 | 0.29 | 0.39 | 1.00 |
| -2.00 | 2.00 | -3.00 | 0.02 | 0.98 | 0.01 | 1.00 |

Softmax

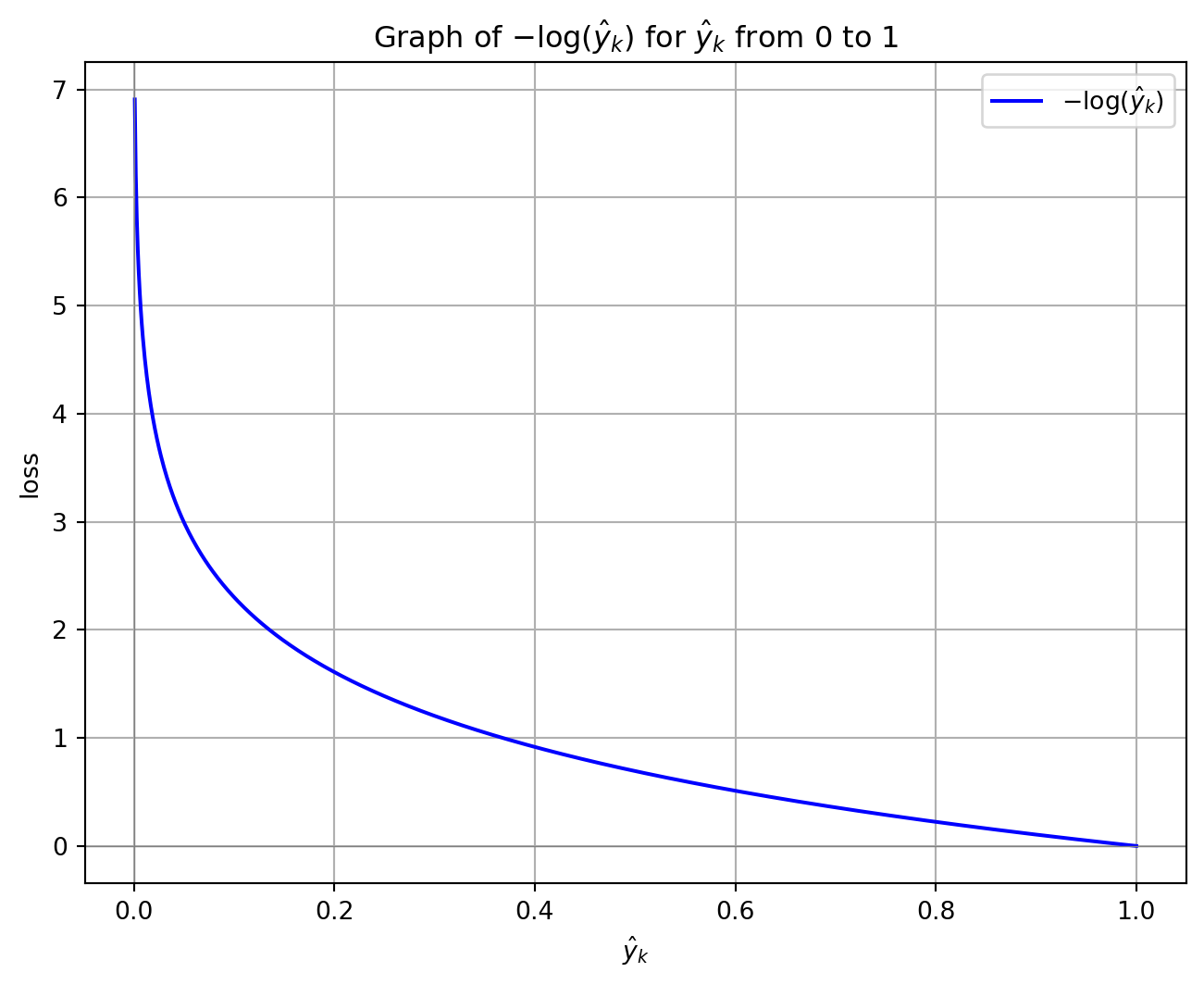

Cross-entropy loss function

The cross-entropy in a multi-class classification task for one example:

\[ J(W) = -\sum_{k=1}^{K} y_k \log(\hat{y}_k) \]

Where:

- \(K\) is the number of classes.

- \(y_k\) is the true probability of class \(k\) (with one-hot encoding, 1 for the correct class and 0 otherwise).

- \(\hat{y}_k\) is the predicted probability of class \(k\) from the model.
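As a short worked step, assume the target is one-hot and write \(c\) for the index of the true class (the symbol \(c\) is introduced here for illustration). Then \(y_c = 1\) and \(y_k = 0\) for \(k \neq c\), so only one term of the sum survives:

\[ J(W) = -\sum_{k=1}^{K} y_k \log(\hat{y}_k) = -\log(\hat{y}_c) \]

The loss is 0 when the model assigns probability 1 to the true class and grows without bound as \(\hat{y}_c\) approaches 0.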

Cross-entropy loss function

- Classification Problem: 3 classes

- Versicolour, Setosa, Virginica.

- One-Hot Encoding:

- Setosa = \([0, 1, 0]\).

- Softmax Outputs & Loss:

- \([0.22,\mathbf{0.7}, 0.08]\): Loss = \(-\log(0.7) = 0.3567\).

- \([0.7, \mathbf{0.22}, 0.08]\): Loss = \(-\log(0.22) = 1.5141\).

- \([0.7, \mathbf{0.08}, 0.22]\): Loss = \(-\log(0.08) = 2.5257\).
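The three losses above can be reproduced with a small sketch. The function name `cross_entropy` is illustrative, and `math.log` is the natural logarithm, which matches the values listed.

```python
import math

def cross_entropy(y_true, y_pred):
    """Cross-entropy between a target distribution and predicted probabilities."""
    # Terms with y_k == 0 contribute nothing, so they are skipped;
    # this also avoids evaluating log(0) for non-target classes.
    return -sum(y * math.log(p) for y, p in zip(y_true, y_pred) if y > 0)

setosa = [0, 1, 0]  # one-hot target from the example above
predictions = ([0.22, 0.7, 0.08], [0.7, 0.22, 0.08], [0.7, 0.08, 0.22])
losses = [round(cross_entropy(setosa, y_pred), 4) for y_pred in predictions]
print(losses)  # [0.3567, 1.5141, 2.5257]
```

The loss grows quickly as the probability assigned to the true class shrinks.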

Case: one example

Case: dataset

For a dataset with \(N\) examples, the average cross-entropy loss over all examples is computed as:

\[ L = -\frac{1}{N} \sum_{i=1}^{N} \sum_{k=1}^{K} y_{i,k} \log(\hat{y}_{i,k}) \]

Where:

- \(i\) indexes over the different examples in the dataset.

- \(y_{i,k}\) and \(\hat{y}_{i,k}\) are the true and predicted probabilities for class \(k\) of example \(i\), respectively.
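A minimal sketch of the dataset-level average follows. The function name `mean_cross_entropy` is illustrative, and the three-row "dataset" simply reuses the three Setosa predictions from the earlier example as made-up data.

```python
import math

def mean_cross_entropy(Y_true, Y_pred):
    """Average cross-entropy over N examples (rows) and K classes (columns)."""
    total = 0.0
    for y_row, p_row in zip(Y_true, Y_pred):
        # Inner sum over classes k; zero-valued targets are skipped.
        total -= sum(y * math.log(p) for y, p in zip(y_row, p_row) if y > 0)
    return total / len(Y_true)

Y_true = [[0, 1, 0], [0, 1, 0], [0, 1, 0]]
Y_pred = [[0.22, 0.7, 0.08], [0.7, 0.22, 0.08], [0.7, 0.08, 0.22]]
loss = mean_cross_entropy(Y_true, Y_pred)
print(round(loss, 4))  # 1.4655
```

The result is the mean of the three per-example losses, so a single badly misclassified example raises the average noticeably.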

Regularization

Definition

Regularization comprises a set of techniques designed to enhance a model’s ability to generalize by mitigating overfitting. By discouraging excessive model complexity, these methods improve the model’s robustness and performance on unseen data.

Adding penalty terms to the loss

In numerical optimization, it is standard practice to incorporate additional terms into the objective function to deter undesirable model characteristics.

In a minimization problem, the optimizer is steered away from solutions that incur large penalty terms, because those terms increase the value of the objective.

Loss function

Consider the mean absolute error loss function:

\[ \mathrm{MAE}(X,W) = \frac{1}{N} \sum_{i=1}^N | h_W(x_i) - y_i | \]

Where:

- \(W\) are the weights of our network.

- \(h_W(x_i)\) is the output of the network for example \(i\).

- \(y_i\) is the true label for example \(i\).

Penalty term(s)

One or more terms can be added to the loss:

\[ \mathrm{Loss}(X,W) = \frac{1}{N} \sum_{i=1}^N | h_W(x_i) - y_i | + \mathrm{penalty} \]

Norm

A norm assigns a non-negative length to a vector.

The \(\ell_p\) norm of a vector \(\mathbf{z} = [z_1, z_2, \ldots, z_n]\) is defined as:

\[ \|\mathbf{z}\|_p = \left( \sum_{i=1}^{n} |z_i|^p \right)^{1/p} \]

\(\ell_1\) and \(\ell_2\) norms

The \(\ell_1\) norm (Manhattan norm) is:

\[ \|\mathbf{z}\|_1 = \sum_{i=1}^{n} |z_i| \]

The \(\ell_2\) norm (Euclidean norm) is:

\[ \|\mathbf{z}\|_2 = \sqrt{\sum_{i=1}^{n} z_i^2} \]
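The definitions above can be checked with a small sketch. The function name `lp_norm` is illustrative, and the vector \([3, -4]\) is chosen only because its norms are easy to verify by hand.

```python
def lp_norm(z, p):
    """Return the l_p norm of a list of numbers, for p >= 1."""
    return sum(abs(v) ** p for v in z) ** (1.0 / p)

z = [3.0, -4.0]
l1 = lp_norm(z, 1)  # |3| + |-4| = 7
l2 = lp_norm(z, 2)  # sqrt(9 + 16) = 5
print(l1, l2)
```

For the same vector, the \(\ell_1\) norm is never smaller than the \(\ell_2\) norm.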

\(\ell_1\) and \(\ell_2\) regularization

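Below, \(\alpha\) and \(\beta\) control the strength of the \(\ell_1\) and \(\ell_2\) penalties, respectively; setting either to zero disables the corresponding term.

\[ \mathrm{Loss}(X,W) = \frac{1}{N} \sum_{i=1}^N | h_W(x_i) - y_i | + \alpha \|W\|_1 + \beta \|W\|_2^2 \]

Here the norms are computed over all the weights of the network; by convention, the \(\ell_2\) penalty uses the squared norm.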
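The penalized loss can be sketched as follows. It assumes the \(\ell_1\) term is the sum of absolute weights and the \(\ell_2\) term is the sum of squared weights (the usual convention). The function names, the flat list of weights, and the numbers are all made up for illustration.

```python
def mae(predictions, targets):
    """Mean absolute error between predictions and targets."""
    return sum(abs(p - y) for p, y in zip(predictions, targets)) / len(targets)

def regularized_loss(predictions, targets, weights, alpha=0.0, beta=0.0):
    """MAE plus an l1 penalty (alpha) and a squared-l2 penalty (beta)."""
    l1 = sum(abs(w) for w in weights)
    l2_squared = sum(w * w for w in weights)  # assumption: squared l2 norm
    return mae(predictions, targets) + alpha * l1 + beta * l2_squared

preds, targets = [2.5, 0.0, 2.0], [3.0, -0.5, 2.0]
weights = [0.5, -1.5, 2.0]  # hypothetical flattened network weights
base = regularized_loss(preds, targets, weights)  # alpha = beta = 0
penalized = regularized_loss(preds, targets, weights, alpha=0.01, beta=0.001)
print(base, penalized)
```

With both coefficients at zero the function returns the plain MAE; increasing either coefficient makes large weights more costly.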

Guidelines

- \(\ell_1\) Regularization:

- Promotes sparsity by driving many weights to exactly zero.

- Useful for feature selection, since features whose weights reach zero are effectively ignored.

- \(\ell_2\) Regularization:

- Promotes small, distributed weights for stability.

- Ideal when all features contribute and reducing complexity is key.

Keras example

Dropout

Dropout is a regularization technique in neural networks where randomly selected neurons are ignored during training, reducing overfitting by preventing co-adaptation of features.

Dropout

During each training step, each neuron in a dropout layer has a probability \(p\) of being excluded from the computation. Typical values for \(p\) are between 10% and 50%.

While seemingly counterintuitive, this approach prevents the network from depending on specific neurons, promoting the distribution of learned representations across multiple neurons.
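The mechanism can be sketched in plain Python. This sketch uses the common "inverted dropout" variant, which is an assumption not spelled out above: surviving activations are divided by \(1 - p\) during training so that their expected value is unchanged, and nothing is dropped at inference time. The function name and the `rng` parameter are illustrative.

```python
import random

def dropout(activations, p, training=True, rng=random):
    """Inverted dropout: zero each activation with probability p during training."""
    if not training or p == 0.0:
        return list(activations)  # dropout is inactive at inference time
    keep = 1.0 - p
    # Survivors are scaled by 1/keep so the expected activation is unchanged.
    return [a / keep if rng.random() >= p else 0.0 for a in activations]

rng = random.Random(0)  # fixed seed so the sketch is reproducible
out = dropout([1.0] * 10, p=0.2, rng=rng)
print(out)
```

Each call draws a fresh random mask, so a different subset of neurons is silenced at every training step.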

Dropout

Dropout is one of the most popular and effective methods for reducing overfitting.

The typical improvement in performance is modest, usually around 1 to 2%.

Keras

```python
import keras
from keras.models import Sequential
from keras.layers import InputLayer, Dropout, Flatten, Dense

# A Dropout layer (rate=0.2) precedes each Dense layer.
model = Sequential([
    InputLayer(shape=[28, 28]),
    Flatten(),
    Dropout(rate=0.2),
    Dense(300, activation="relu"),
    Dropout(rate=0.2),
    Dense(100, activation="relu"),
    Dropout(rate=0.2),
    Dense(10, activation="softmax")
])
```

Early Stopping

Definition

Early stopping is a regularization technique that halts training once the model’s performance on a validation set begins to degrade, preventing overfitting by stopping before the model learns noise.
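The stopping rule can be sketched without any framework. The `patience` parameter (the number of consecutive epochs without improvement that are tolerated) is an assumption introduced here, and the validation losses are made-up values.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return the index of the epoch at which training would stop."""
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch  # validation loss has stopped improving
    return len(val_losses) - 1  # rule never triggered: train to the end

val_losses = [0.90, 0.70, 0.60, 0.62, 0.65, 0.71]  # made-up values
stop = early_stopping_epoch(val_losses, patience=2)
print(stop)  # 4
```

In practice, the weights from the best epoch (index 2 here) are usually restored after stopping.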

Prologue

Summary

- Loss Functions:

- Regression Tasks: Mean Squared Error (MSE).

- Classification Tasks: Cross-Entropy Loss with Softmax activation for multi-class outputs.

- Regularization Techniques:

- L1 and L2 Regularization: Add penalty terms to the loss to discourage large weights.

- Dropout: Randomly deactivate neurons during training to prevent overfitting.

- Early Stopping: Halt training when validation performance deteriorates.

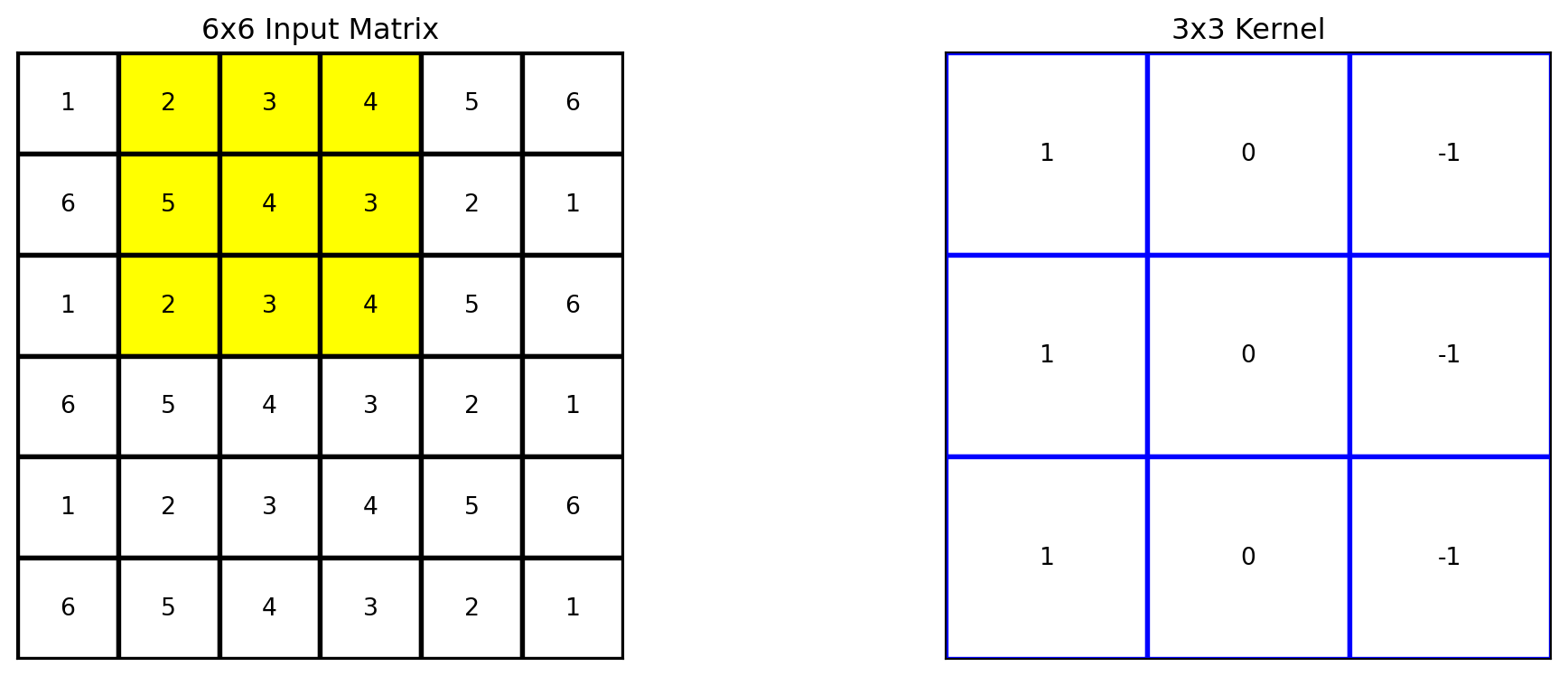

Next lecture

- We will introduce convolutional neural networks.

Marcel Turcotte

School of Electrical Engineering and Computer Science (EECS)

University of Ottawa